Robotics and Autonomous Systems (REU) -- Summer 2026

The Department of Computer Science at the University of Southern California offers a 10-week summer research program for undergraduates in Robotics and Autonomous Systems. USC has a large and well-established robotics research program that ranges from theoretical to experimental and systems-oriented work. USC is a leader in several areas: the societally relevant area of robotics for healthcare and at-risk populations (children, the elderly, veterans, etc.); networked robotics for scientific discovery, covering, for example, environmental monitoring, target tracking, and formation control using underwater, ground, and aerial robots; and control, machine learning, and perceptual algorithms for grasping, manipulation, and locomotion of humanoid robots. For a comprehensive resource on USC robotics, see http://rasc.usc.edu.

Undergraduates in the program will gain research experience spanning the spectrum of cutting-edge research topics in robotics. They will also gain exposure to robotics research beyond the scope of the REU site, through seminars by other USC faculty and external site visits, to aid in planning their next career steps. External visits may include trips to the USC Information Sciences Institute (ISI) in Marina del Rey, one of the world's leading research centers in the fields of computer science and information technology; the USC Institute for Creative Technologies (ICT) in Playa Vista, whose work in virtual reality and computer simulation has produced engaging, new, immersive technologies for learning, training, and operational environments; as well as the NASA Jet Propulsion Laboratory (JPL) in Pasadena, which has led the world in exploring the solar system's known planets with robotic spacecraft.

Robotics is an interdisciplinary field that draws on computer science, mechanical engineering, and electrical engineering, as well as fields outside engineering; this gives REU students an opportunity to learn about different fields and the broad nature of research. We therefore welcome applications from students in computer science and all fields of engineering, as well as other fields such as neuroscience, psychology, and kinesiology. In addition to participating in seminars and social events, students will prepare a final written report and present their projects to the rest of the institute at the end of the summer.

This Research Experiences for Undergraduates (REU) site is supported by a grant from the National Science Foundation (2447397).

Contact Daniel Seita (seita@usc.edu) with questions.

The REU application opens on November 24, 2025.

Research Projects

When you apply, we will ask you to rank your top three interests from the research projects listed below. We encourage applicants to explore each mentor’s website to learn more about the individual research activities of each lab.

Socially Assistive Robotics

Projects focus on developing socially assistive robot (SAR) systems based on AI/machine learning and capable of perceiving and understanding user activity, goals, and affect in real time, from multimodal and heterogeneous data (video, audio, physiologic sensor wearables), aiding the user through social interactions that combine monitoring, coaching, motivation, and companionship, and personalizing and adapting to the user within an interaction, between interactions, and over time in order to support health, education, and training contexts.

Sample Project: Developing SAR software: The student will work with PhD students involved in developing a socially assistive robot for use by university students in college dorms, to facilitate their practice of emotion-regulation skills such as cognitive behavioral therapy, mindfulness, etc., in order to mitigate anxiety and depression. The student will aid in gathering relevant literature both in robotics and in social science and behavioral science, aid in the development of software for the user interfaces (robot, tangibles, screens, web) and behavioral interventions, as well as multimodal data analysis and model learning. This applies to various projects in the PI’s lab, with different user populations: children with autism, children with CP, K-12 students, university students, adolescents/young adults, stroke patients, elderly with dementia, and healthy / typical users.

Expected Background: The student needs some level of Python or other programming experience.

Faculty Mentor

Autonomy for Aerial Robots

Relevant projects focus on developing networked multi-robot systems capable of communicating among themselves and with unattended, immobile wireless sensors.

Sample Project: Developing software for aerial robots: The student will work with PhD students involved in developing teams of autonomous aerial robots. The student will work on gathering and studying relevant literature and aid in the development of visualization, control, planning, and navigation software for these systems.

Expected Background: The student must have software development experience at the level of an introductory CS programming class. All other training will be part of the 10-week REU.

Faculty Mentor

Data Augmentation for Bimanual Robot Manipulation

This project focuses on developing new data-augmentation methods to improve learning-based robot manipulation, with an emphasis on generating diverse, physically consistent examples for challenging dual-arm tasks. The student will work with state-of-the-art generative models, such as diffusion models for synthesizing novel robot poses or video-conditioned models for creating new demonstrations, to expand limited real-world datasets without additional data collection. Once these augmented datasets are created, the student will test how they improve performance on bimanual nonprehensile manipulation tasks where robots must coordinate two arms to push, slide, lift, or stabilize objects through contact rather than grasping. Depending on interest, the student may explore viewpoint augmentation, RGB-D augmentation, cross-embodiment transfer, or contact-aware augmentation techniques that preserve task feasibility. The goal is to understand how synthetic data can enable robots to generalize across new objects, configurations, and embodiments, ultimately making complex dual-arm manipulation more robust and scalable.

Prerequisites: basic machine learning and computer vision knowledge, solid Python programming skills.

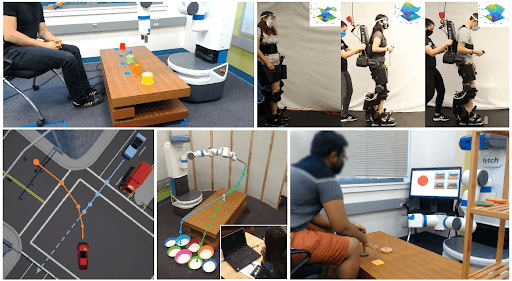

Robot learning from natural human feedback

We aim to make post-deployment robot learning accessible, efficient, and practical for everyday users by enabling robots to learn from natural human feedback rather than large collections of expert demonstrations. The project develops algorithms that allow generalist robotic models to adapt to new tasks using intuitive feedback, such as natural language, eye gaze, human demonstrations performed without the robot, and real-time user interventions or corrections. To this end, this project involves research directions around (1) learning from natural, low-burden feedback by grounding robot behavior in shared representations with language and human actions; (2) modeling human teaching behavior to improve data-efficiency; and (3) developing continual learning methods that treat human interventions and corrections as rich probabilistic signals for continual adaptation. Together, these contributions will enable robots to be teachable and safe in real-world, human-centered environments, significantly lowering barriers to robot use.

Prerequisites: hands-on experience with basic machine learning methods, solid Python programming skills.

Faculty Mentor

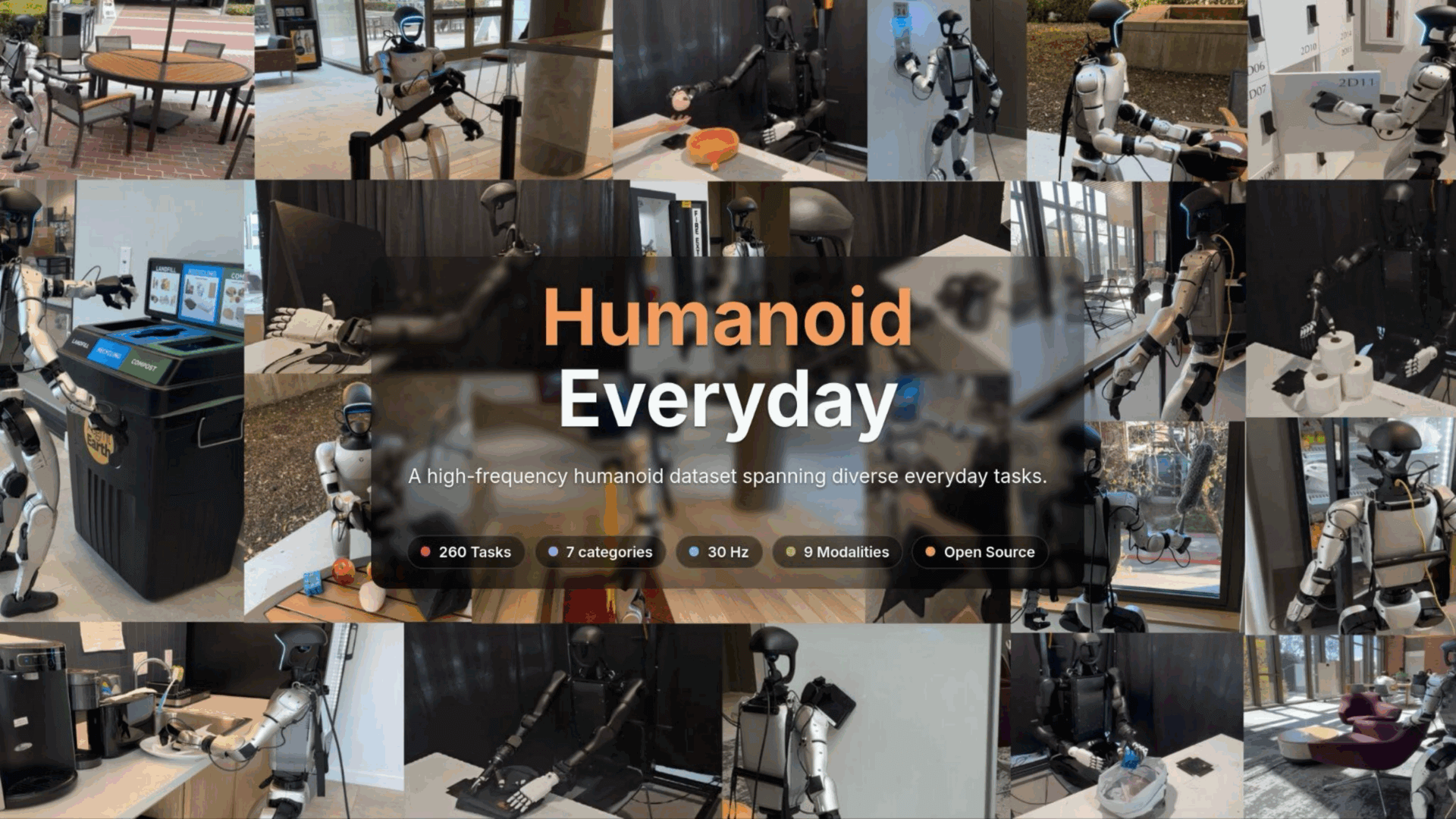

Humanoid Vision-Language-Action (VLA) Systems

Our group is developing integrated humanoid Vision-Language-Action (VLA) systems that enable robots to understand and interact with the physical world through perception, reasoning, and embodied control. This research spans the complete lifecycle of model development—from pretraining large-scale multimodal representations, to post-training and fine-tuning through reinforcement and human feedback, to deployment on real humanoid platforms. At the pretraining stage, we focus on aligning 3D perception, language, and motor skills using massive embodied datasets and simulation environments. Post-training efforts emphasize adaptive learning and safe policy refinement for complex manipulation and locomotion tasks. Finally, deployment studies examine real-world transfer, robustness, and generalization across diverse humanoid systems such as Unitree and Dexmate. This line of work bridges machine learning, robotics, and geometric vision, aiming to create scalable embodied intelligence capable of grounded understanding and purposeful action. It forms a central pillar of our lab’s broader mission—building physically grounded, data-driven digital twins and humanoid agents that learn from experience and operate safely and autonomously in dynamic environments.

Prerequisites: basic machine learning, computer vision, and robotics knowledge.

Faculty Mentor

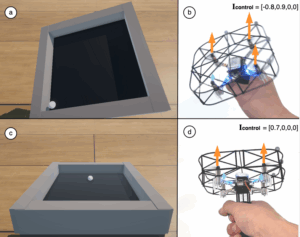

Haptics for Virtual Reality

This project explores how small drones can serve as flying haptic interfaces that bring touch into virtual and augmented reality experiences. Students will work on developing and testing “safe-to-touch” quadcopters that can move around users and deliver physical feedback, like gentle pushes, taps, or resistive forces, exactly where a virtual object would be felt. The goal is to make digital interactions more tangible and immersive, blending the boundaries between the real and virtual. Depending on interests, students may contribute to areas such as human–robot interaction studies, control and localization of drones, or design of novel soft structures for physical contact.

Expected Background: Students should have prior coding experience (Python preferred). Some prior experience with hardware and/or ROS is preferred, but not required.

Robot Locomotion and Sensing for Earth and Planetary Explorations

Physical environments can provide a variety of interaction opportunities for robots to exploit towards their locomotion goals. However, it is unclear how to even extract information about – much less exploit – these opportunities from physical properties (e.g., shape, size, distribution) of the environment. This project integrates mechanical engineering, electrical engineering, computer science, and physics, to discover the general principles governing the interactions between bio-inspired robots and their locomotion environments, and uses these principles to create novel control, sensing, and navigation strategies for robots to effectively move through non-flat, non-rigid, complex terrains to support applications in Earth and planetary explorations.

Selected candidates will assist in the development of robotic platforms (Arduino, Raspberry Pi, SolidWorks, 3D printing), experimental data collection and analysis (motion capture tracking, force measurements), and modeling and simulation (MATLAB, Python, C++, ROS).

Faculty Mentor

Guiding Vision-Language-Action Models to Learn Verifiable Robot Policies with Minimal Sim2Real Gap

Recent results on vision-language-action models show a lot of promise in learning robot policies that directly map sensor observations to robot actions. However, these policies typically do not provide any guarantees on the safety or performance of the robot. In this project, we will explore how a robot’s task and safety objectives could be encoded using logical formalisms and how neuro-symbolic methods could be used to train the robot’s policies in a way that guarantees the performance or safety of the robot.

Some exposure to logic would be ideal. The intern should have some knowledge of using and training VLAs or other kinds of multi-modal foundation models.

Faculty Mentor

Location and Housing

USC is located near downtown Los Angeles. There are numerous restaurants and stores on or adjacent to campus, including Target and Trader Joe’s. USC’s campus is directly linked to downtown LA and downtown Santa Monica with the Expo line light rail, so you have easy access to cultural attractions as well as shopping and the beach! A stipend will be provided to students. We will additionally provide housing and compensation for meals.

Benefits

- Work with some of the leading researchers in robotics and autonomy.

- Receive a stipend of $6,100 for the entire duration of the program. We will additionally provide housing and compensation for meals.

Eligibility

- U.S. citizenship or permanent residency is required.

- Students must be currently enrolled in an undergraduate program.

- Students must not have completed an undergraduate degree prior to the summer program.

Important Dates

- Application opens: November 24, 2025

- Application deadline: January 11, 2026

- Notification of acceptance begins: January 30, 2026

- Notification of declined applicants: March 10, 2026

- Start Date: May 26, 2026

- End Date: Friday, July 31, 2026

How to Apply

Application Form

Fill out the online REU application (when the application is open, the button below will contain a link).

You must complete the application form before the rest of your application materials will be reviewed!

Application opens: November 24, 2025

Application deadline: January 11, 2026

Supplemental Materials

Upload the following materials to the online REU application form:

- The most recent unofficial transcripts from all undergraduate institutions you have attended.

- Your resume / CV.

- A one-page personal statement. This may include your research interests and how you came to be interested in them, your previous research experiences, your reasons for wanting to participate in the research at USC, and how participating in this experience might better prepare you to meet your future goals.

Recommendation Letter

Ask a faculty member to send a letter of recommendation directly to the program administrator.

Email: seita@usc.edu

Subject: “REU Site Recommendation: Your full name here” (e.g., “REU Site Recommendation: Leslie Smith”)

Filename: Lastname_Firstname_Recommendation.pdf

Please note that due to the large number of responses, it is not possible to confirm the receipt of individual letters. Applicants are encouraged to check with their recommender directly to confirm that the letter has been submitted.

This project focuses on coordinating teams of robots to autonomously create desired shapes and patterns with minimal user input and minimal communication. Inspired by human abilities to self-localize and self-organize, the research focuses on underlying algorithms for self-localization using information collected by a robot's onboard sensors. We have run several human studies using an online multi-player interface we developed for our NSF-funded project. Using the interface, participants interact to form shapes in a playing field, communicating only through implicit means provided by the interface. The research involves a combination of designing and testing algorithms for shape formation, coordination, and control, and implementing them on a testbed of 20 robots specifically designed for this task.
