Robotics and Autonomous Systems (REU)

The Department of Computer Science at the University of Southern California offers a 10-week summer research program for undergraduates in Robotics and Autonomous Systems. USC has a large and well-established robotics research program that ranges from theoretical to experimental and systems-oriented work. USC is a leader in the societally relevant area of robotics for healthcare and at-risk populations (children, the elderly, veterans, etc.); networked robotics for scientific discovery, covering, for example, environmental monitoring, target tracking, and formation control using underwater, ground, and aerial robots; and control, machine learning, and perceptual algorithms for grasping, manipulation, and locomotion of humanoid robots. For a comprehensive resource on USC robotics, see http://rasc.usc.edu.

Undergraduates in the program will gain research experience spanning the spectrum of cutting-edge research topics in robotics. They will also gain exposure to robotics research beyond the scope of the REU site, through seminars by other USC faculty and external site visits, to aid in planning their next career steps. External visits may include trips to the USC Information Sciences Institute (ISI) in Marina del Rey, one of the world's leading research centers in the fields of computer science and information technology; the USC Institute for Creative Technologies (ICT) in Playa Vista, whose technologies for virtual reality and computer simulation have produced engaging, new, immersive technologies for learning, training, and operational environments; as well as NASA Jet Propulsion Laboratory (JPL) in Pasadena, which has led the world in exploring the solar system's known planets with robotic spacecraft.

Robotics is an interdisciplinary field, involving expertise in computer science, mechanical engineering, and electrical engineering, as well as fields outside engineering; this gives the REU students an opportunity to learn about different fields and the broad nature of research. Thus, we welcome applications from students in computer science and all fields of engineering, as well as other fields including neuroscience, psychology, kinesiology, etc. In addition to participating in seminars and social events, students will also prepare a final written report and present their projects to the rest of the institute at the end of the summer.

This Research Experiences for Undergraduates (REU) site is supported by a grant from the National Science Foundation (CNS-2051117). The site focuses on recruiting a diverse set of participants, as well as students from underrepresented groups.

For general questions or additional information, please contact us using the form below.

For students interested in the Robotics and Autonomous Systems (REU) for Summer 2024, please join our interest list via the button below.

The REU application opens on February 13, 2024.

Research Projects

May 28, 2024 - August 2, 2024

When you apply, we will ask you to rank your top three interests from the research projects listed below. We encourage applicants to explore each mentor’s website to learn more about the individual research activities of each lab.

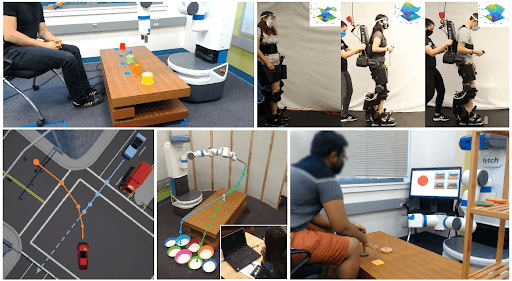

Socially Assistive Robotics

Our new interdisciplinary NSF-supported project is developing novel interfaces to enable people with physical disabilities to program, so they can have better access to employment in computing. We will be developing, testing, and evaluating a broad range of interfaces (voice, gesture, speech, micro-expressions, facial expressions, body movements, pedals, etc.) and developing machine learning methods to model human physical abilities and map them to customized interfaces. This is part of our lab’s work on socially assistive devices and robotics, systems capable of aiding people through interactions that combine monitoring, coaching, motivation, and companionship. We develop human-machine interaction algorithms (involving control and learning in complex, dynamic, and uncertain environments by integrating on-line perception, representation, and interaction with people) and software for providing personalized assistance for accessibility as well as in convalescence, rehabilitation, training, and education. Our research involves a combination of algorithms and software, system integration, and the design, execution, and data analysis of human-subject evaluation studies. To address the inherently multidisciplinary challenges of this research, the work draws on theories, models, and collaborations from neuroscience, cognitive science, social science, health sciences, and education.

Faculty Mentor

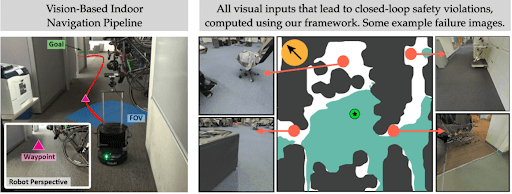

Detecting and Mitigating Anomalies in Vision-Based Controllers

Autonomous systems, such as self-driving cars and drones, have made significant strides in recent years by leveraging visual inputs and machine learning for decision-making and control. Despite their impressive performance, these vision-based controllers can make erroneous predictions when faced with novel or out-of-distribution inputs. Such errors can cascade to catastrophic system failures and compromise system safety, as exemplified by recent self-driving car accidents. In this project, we aim to design a run-time anomaly monitor to detect and mitigate such closed-loop, system-level failures. Specifically, we leverage a reachability-based framework to stress-test the vision-based controller offline and mine its system-level failures. This data is then used to train a classifier that is leveraged online to flag inputs that might cause system breakdowns. The anomaly detector highlights issues that transcend individual modules and pertain to the safety of the overall system. We will also design a fallback controller that robustly handles these detected anomalies to preserve system safety. In our preliminary work, we validate the proposed approach on an autonomous aircraft taxiing system that uses a vision-based controller for taxiing. Our results show the efficacy of the proposed approach in identifying and handling system-level anomalies, outperforming methods such as prediction error-based detection and ensembling, thereby enhancing the overall safety and robustness of autonomous systems. Preliminary experiment videos can be found at: https://phoenixrider12.github.io/failure_mitigation
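The run-time structure described above (a classifier trained offline that flags risky inputs online, plus a fallback controller) can be sketched as follows. This is a minimal illustration only, not the project's implementation: the class name `MonitoredController`, the brightness-based anomaly score, the threshold value, and the toy controllers are all hypothetical stand-ins chosen to keep the example self-contained.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Observation = Sequence[float]  # hypothetical stand-in for a camera image


@dataclass
class MonitoredController:
    """Wraps a nominal controller with an anomaly monitor and a fallback."""
    nominal: Callable[[Observation], float]        # e.g. a vision-based controller
    fallback: Callable[[Observation], float]       # conservative backup controller
    anomaly_score: Callable[[Observation], float]  # e.g. a classifier trained offline
    threshold: float = 0.5                         # assumed decision threshold

    def act(self, obs: Observation) -> Tuple[float, bool]:
        """Return (command, flagged); the fallback is used when obs is flagged."""
        flagged = self.anomaly_score(obs) >= self.threshold
        command = self.fallback(obs) if flagged else self.nominal(obs)
        return command, flagged


def mean_brightness(obs: Observation) -> float:
    return sum(obs) / len(obs)


# Toy setup (assumption): dark images are treated as anomalous.
controller = MonitoredController(
    nominal=lambda obs: 2.0 * (mean_brightness(obs) - 0.5),
    fallback=lambda obs: 0.0,  # e.g. command a stop
    anomaly_score=lambda obs: 1.0 - mean_brightness(obs),
    threshold=0.7,
)

bright_command, bright_flagged = controller.act([0.8, 0.9, 0.7])
dark_command, dark_flagged = controller.act([0.1, 0.2, 0.0])
print(bright_command, bright_flagged)
print(dark_command, dark_flagged)
```

In this sketch the monitor sits in front of both controllers, so the decision to fall back depends only on the input and the classifier, not on the internals of the nominal controller.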

Faculty Mentor

Robots and Contact

When robots operate in their environment, we normally wish for them to avoid making any contact with obstacles. For example, we might want a robot arm to reach into a cluttered closet to grab a target item, and we would normally want the robot to avoid contact with anything other than that target item. But what if some types of contact are acceptable? Sometimes it might even be necessary to make contact with obstacles in order to reach a target. Humans frequently make incidental contact with obstacles, such as when we reach into a backpack to retrieve an item buried in it while touching other things in the bag. In this project, we will implement machine learning algorithms to create robots comfortable with making appropriate contact with environmental obstacles. The student will develop simulation environments of a robot manipulator in a cluttered setup to test our algorithms. Then we will move to a physical setup that closely mirrors the simulation environment.

Actively Collecting Language Feedback for Reward Learning

Preference-based learning algorithms have been combined with active querying to learn reward functions in a data-efficient way. Our recent work shows that humans’ language feedback can be leveraged to replace preference comparisons with trajectory-language feedback pairs. The goal of this project is to extend these new algorithms with active data collection so that interaction with the human becomes cheaper. Potential applications are in robotics and natural language processing.
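For readers new to the area, the sketch below illustrates the preference-comparison baseline that this project builds on; it does not implement the language-feedback algorithms themselves. It assumes a linear reward over trajectory features and a logistic (Bradley-Terry style) preference model, which is a common choice but an assumption here. The function names, the two-dimensional features, and the "closest to 0.5" query heuristic are hypothetical.

```python
import math


def preference_probability(w, phi_a, phi_b):
    """P(trajectory A preferred over B) under a linear reward w . phi."""
    diff = sum(wi * (a - b) for wi, a, b in zip(w, phi_a, phi_b))
    return 1.0 / (1.0 + math.exp(-diff))


def fit_reward_weights(comparisons, dim, lr=0.5, steps=500):
    """Gradient ascent on the log-likelihood of (preferred, other) pairs."""
    w = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for phi_pref, phi_other in comparisons:
            p = preference_probability(w, phi_pref, phi_other)
            for i in range(dim):
                grad[i] += (1.0 - p) * (phi_pref[i] - phi_other[i])
        w = [wi + lr * g / len(comparisons) for wi, g in zip(w, grad)]
    return w


def most_uncertain_pair(w, candidate_pairs):
    """Simple active-query heuristic: ask about the pair the model is least sure of."""
    return min(
        candidate_pairs,
        key=lambda pair: abs(preference_probability(w, pair[0], pair[1]) - 0.5),
    )


# Toy data (assumption): each trajectory is summarized by two features,
# and the human prefers a high first feature and a low second feature.
comparisons = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.8, 0.2], [0.3, 0.9]),
    ([0.9, 0.1], [0.2, 0.4]),
]
weights = fit_reward_weights(comparisons, dim=2)

candidates = [
    ([1.0, 0.0], [0.0, 1.0]),  # model is already confident about this pair
    ([0.5, 0.5], [0.5, 0.5]),  # identical features: maximally uncertain
]
next_query = most_uncertain_pair(weights, candidates)
print(weights, next_query)
```

Choosing the most uncertain pair is only one of several possible query-selection criteria; it is used here because it fits in a few lines.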

Faculty Mentor

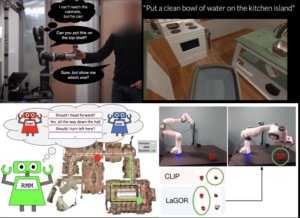

Language-guided Robots

Natural language can be used as a communication medium between people and robots. However, most existing natural language processing technology is based solely on written text. A language system that has read and memorized thousands of sentences using the word “hot” will be unable to warn a robot system about the physical sensor danger of touching a live stove burner when a person warns “Watch out, that’s hot!” This project will focus on developing grounded natural language processing models for human-robot collaboration that consider aspects of embodiment such as gestures and gaze, physical object properties, and the interplay between language and vision. Approaches will utilize tools and techniques from a broad range of disciplines, including natural language processing, computer vision, robotics, and linguistics.

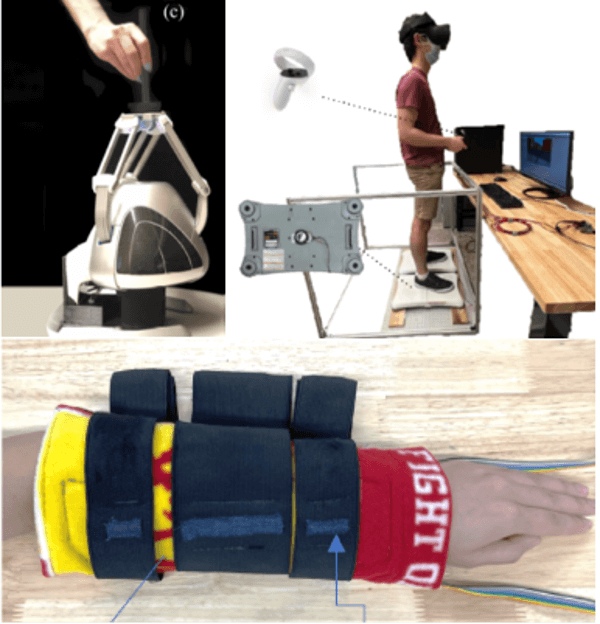

Haptics for Virtual Reality

This project focuses on the design, building, and control of haptic devices for virtual reality. Current VR systems lack any touch feedback, providing only visual feedback, even though touch is a critical component of our interactions with the physical world and with other people. This research will investigate how we use our sense of touch to communicate with the physical world and use this knowledge to design haptic devices and rendering systems that allow users to interact with and communicate through the virtual world. To accomplish this, the project will integrate electronics, mechanical design, programming, and human perception to build and program a device that displays artificial touch sensations to a user, with the goal of creating a natural and realistic interaction.

Location and Housing

USC is located near downtown Los Angeles. There are numerous restaurants and stores on or adjacent to campus, including Target and Trader Joe’s. USC’s campus is directly linked to downtown LA and downtown Santa Monica with the Expo line light rail, so you have easy access to cultural attractions as well as shopping and the beach! A stipend will be provided to students. We will additionally provide housing and compensation for meals.

Benefits

- Work with some of the leading researchers in robotics and autonomy.

- Receive a stipend of $6000 for the entire duration of the program. We will additionally provide housing and compensation for meals.

Eligibility

- U.S. citizenship or permanent residency is required.

- Students must be currently enrolled in an undergraduate program.

- Students must not have completed an undergraduate degree prior to the summer program.

Important Dates

- Application opens: February 13, 2024

- Application deadline: February 28, 2024

- Notification of acceptance begins: March 13, 2024

- Notification of declined applicants: March 27, 2024

- Start Date: May 28, 2024

- End Date: August 2, 2024

How to Apply

Step 1

Application Form

Fill out the online REU application (when the application is open, the button below will contain a link).

You must first complete the application form before the rest of your application materials will be reviewed!

Application opens: February 13, 2024

Application deadline: February 28, 2024

Step 2

Supplemental Materials

Upload the following materials using password USC_CS_REU.

- The most recent unofficial transcripts from all undergraduate institutions you have attended.

- A one-page personal statement. This may include your research interests and how you came to be interested in them, your previous research experiences, your reasons for wanting to participate in the research at USC, and how participating in this experience might better prepare you to meet your future goals.

Step 3

Recommendation Letter

Ask a faculty member to send a letter of recommendation directly to the program administrator.

Email: nikolaid_at_usc_dot_edu

Subject: “REU Site Recommendation: Your full name here” (e.g. “REU Site Recommendation: Leslie Smith”)

Filename: Lastname_Firstname_Recommendation.pdf

Please note that due to the large number of responses, it is not possible to confirm the receipt of individual letters. Applicants are encouraged to check with their recommender directly to confirm that the letter has been submitted.

This project focuses on coordinating teams of robots to autonomously create desired shapes and patterns with minimal user input and minimal communication. Inspired by human abilities to self-localize and self-organize, the research focuses on underlying algorithms for self-localization using information collected by each robot's onboard sensors. We have run several human studies using an online multi-player interface we developed for our NSF-funded project. Using the interface, participants interact to form shapes in a playing field, communicating only through implicit means provided by the interface. The research involves a combination of designing and testing algorithms for shape formation, coordination, and control, and implementing them on a testbed of 20 robots specifically designed for this task.
